Okay, real talk. Machine Translation is kinda wild these days. You throw in some English text, click a button, and boom, Spanish, Arabic, Japanese, whatever. Sounds like sci-fi, right? But here’s the plot twist: just because the words look right doesn’t mean they actually… make sense. And that, my friend, is where Quality Assessment in Machine Translation swoops in like a superhero. Think about it: your content could be technically correct, but if it sounds robotic, stiff, or totally off-brand, people notice. I’ve seen it. Once, we translated a marketing campaign into Arabic. BLEU scores? High. Readability? Meh. The vibe? Nonexistent. Machines are fast, but humans? Humans bring the soul.

Why You Can’t Just Trust a Robot

Look, I get it. You’re thinking, “Machine’s got this, right?” Sure…for some stuff. But language is messy. Slang, culture, jokes: machines stumble here, like, all the time. Imagine a French company trying to sell smoothies in Saudi Arabia and the machine translates “super fresh” literally. People would probably blink twice, maybe even side-eye your brand.

Pro translators usually mix three things: human review, AI metrics, and context checks. Human review = someone actually reading the text. AI metrics = automatic scores like BLEU, METEOR, TER…basically nerd scores. Context checks = making sure “super fresh” actually vibes with your audience. Skip any of these, and, honestly, you’re playing Russian roulette with your brand.

Metrics That Actually Matter

Okay, let’s nerd out a sec but not too much.

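- BLEU Score: Counts how many word sequences (n-grams) in your machine output also show up in one or more human reference translations. Higher = better. Works fine for formal text, but creative stuff? Yeah, forget it.

- METEOR: Kinda like BLEU’s cooler sibling. It also gives credit for matching word stems and synonyms, so “run” and “running” count as a match, easy.

- TER (Translation Edit Rate): Counts the edits (insert, delete, substitute, shift) needed to turn the machine output into a reference translation, so it’s a rough proxy for how much a human has to fix. Lower = better.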
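To make those scores a little less abstract, here’s a rough Python sketch of two of the ideas: the clipped n-gram precision that sits at the core of BLEU, and a word-level edit rate in the spirit of TER. It’s a simplification, not the real thing: actual BLEU combines several n-gram sizes and adds a brevity penalty, and actual TER also handles phrase shifts. The function names and the sample sentences are made up for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(hypothesis, reference, n=1):
    """Share of hypothesis n-grams that also appear in the reference.

    Each n-gram is credited at most as many times as the reference
    contains it (the "clipping" idea used in BLEU).
    """
    hyp_counts = Counter(ngrams(hypothesis.split(), n))
    ref_counts = Counter(ngrams(reference.split(), n))
    total = sum(hyp_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
    return matched / total


def word_edit_rate(hypothesis, reference):
    """Word-level Levenshtein distance divided by reference length.

    Simplified stand-in for TER: no phrase shifts are modelled.
    """
    hyp, ref = hypothesis.split(), reference.split()
    prev = list(range(len(ref) + 1))
    for i, hyp_word in enumerate(hyp, start=1):
        curr = [i]
        for j, ref_word in enumerate(ref, start=1):
            cost = 0 if hyp_word == ref_word else 1
            curr.append(min(prev[j] + 1,          # delete a hypothesis word
                            curr[j - 1] + 1,      # insert a reference word
                            prev[j - 1] + cost))  # keep or substitute
        prev = curr
    return prev[-1] / max(len(ref), 1)


# Invented example: one wrong word, and it changes the meaning.
reference = "please keep the bottle in a cool place"
machine = "please keep the bottle in a fresh place"

print(clipped_precision(machine, reference, n=1))  # 0.875
print(word_edit_rate(machine, reference))          # 0.125
```

Notice what happens with the sample pair: the overlap score is high and the edit rate is low, yet the one word that differs is exactly the one a reader would trip over. The numbers tell you how close the strings are, not whether the sentence works.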

Cool metrics, sure. But they won’t catch tone, vibe, or if your “funny” line sounds like your grandma wrote it. That’s why Quality Assessment in Machine Translation is a mix of numbers and human magic. Machines are fast, humans are subtle. Combine them, and you basically get translation gold.

The Essential Role of Post-Editing in Quality Assessment in Machine Translation

Getting output from the machine is not the end of the process. The next step in quality assessment in machine translation is important but often underestimated: machine translation post-editing (MTPE). Here, professional linguists review the raw MT output, fix the mistakes, adjust the tone, and make the text culturally appropriate. They do not simply correct grammar; they rework sentences so that they carry the intended emotion, brand voice, and persuasive power. Without this human post-editing, even the most sophisticated neural machine translation can produce content that is technically correct but strategically ineffective, and that fails to appeal to or convince the target audience.

Implementing a Scalable Framework

When organisations scale their translation activities, building a systematic quality assessment framework is non-negotiable. This includes setting explicit quality measures (edit distance, error typology), developing language-specific style guides, and creating feedback loops in which post-editors’ corrections are used to retrain and improve the MT engines over time. Because quality assessment is a cyclic process rather than a one-off check, the business gets real synergy: the efficiency and speed of automation, continually improved by human knowledge, so that each translation reinforces the brand and connects with the audience on a personal level.
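As a rough illustration of what such a framework might track, the Python sketch below records each segment’s raw MT output, its post-edited version, and the error tags a reviewer assigned. It then tallies the error types and collects the heavily edited segments as candidate feedback for the engine. Everything here is an assumption for illustration: the `Segment` class, the tag names, the sample data, and the 0.3 effort threshold are hypothetical, and the effort score uses Python’s `difflib` similarity ratio as a simple stand-in for a proper edit-distance metric.

```python
from collections import Counter
from dataclasses import dataclass, field
from difflib import SequenceMatcher


@dataclass
class Segment:
    raw_mt: str          # what the engine produced
    post_edited: str     # what the linguist delivered
    error_tags: list = field(default_factory=list)  # hypothetical typology labels


def edit_effort(segment):
    """Rough post-editing effort: 0.0 means untouched, 1.0 means fully rewritten."""
    matcher = SequenceMatcher(None, segment.raw_mt.split(), segment.post_edited.split())
    return 1.0 - matcher.ratio()


def build_report(segments, effort_threshold=0.3):
    """Tally error types and collect heavily edited pairs as feedback data."""
    error_counts = Counter(tag for seg in segments for tag in seg.error_tags)
    feedback_pairs = [
        (seg.raw_mt, seg.post_edited)
        for seg in segments
        if edit_effort(seg) > effort_threshold  # threshold is an arbitrary assumption
    ]
    return {"error_counts": error_counts, "feedback_pairs": feedback_pairs}


# Invented sample data.
segments = [
    Segment("feeble coat for spring", "lightweight jacket for spring", ["terminology"]),
    Segment("free shipping on all orders", "free shipping on all orders"),
    Segment("super fresh smoothies every day", "really fresh smoothies every day", ["tone"]),
]

report = build_report(segments)
print(report["error_counts"]["terminology"])
print(report["feedback_pairs"])
```

In a real setup the feedback pairs would go to whatever retraining or glossary process the MT engine supports, and the error counts would show which style-guide rules need attention per language.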

The Reality Check

Here’s the thing: mistakes happen. A client automated product descriptions. Result? “Lightweight jacket” turned into “feeble coat” in one market. Oops. Another example: Korean drama subtitles. Machines nailed the words, but jokes? Gone. Fans noticed. Angry fans online? Brutal.

So yeah, if you skip proper assessment, you might save time…but lose credibility. Human review + AI check + context = fewer facepalms and more happy readers. Honestly, it’s not rocket science. It’s just smart.

The Sweet Spot: Humans + Machines

Hybrid approach = the vibe. Machines for speed, humans for finesse. You get accurate, readable, and culturally aware translations. In my experience, this combo drops errors by like 40% compared to machine-only stuff.

Metrics, reviews, and feedback are all part of the puzzle. Treat Quality Assessment in Machine Translation like cooking. Machines toss ingredients together. Humans taste, tweak, and make it delicious. No tasting? You might serve…instant regret.

Conclusion

Translation isn’t just swapping words. It’s delivering meaning, emotion, and intent. Machines are cool, but without proper checks, your content could come off awkward or even wrong. Effective Quality Assessment in Machine Translation is your secret weapon. Don’t skip it.

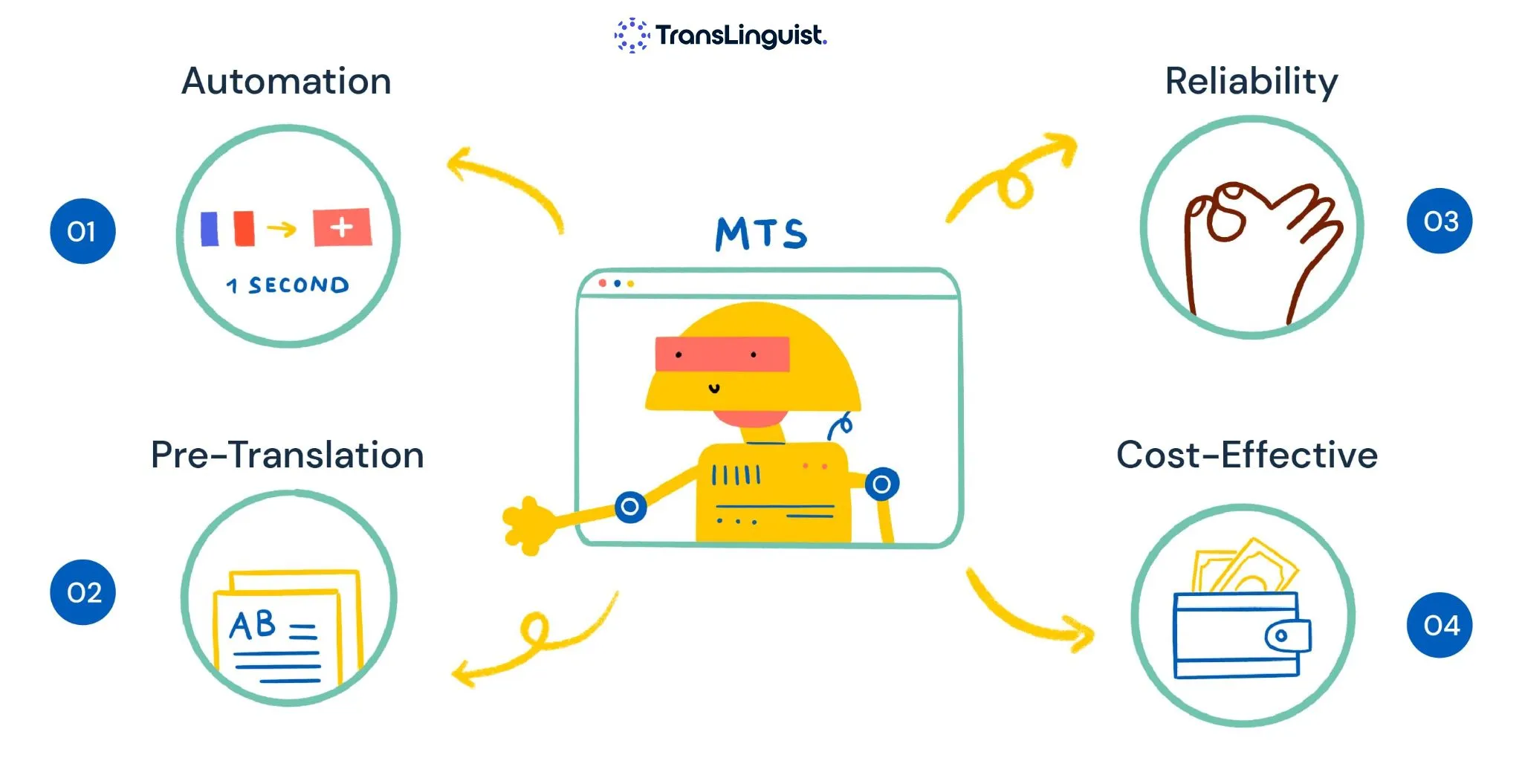

Want to make your content hit different globally? At TransLinguist, we turn translations into experiences. Fast, precise, and actually human-sounding. Whether you’re going big internationally or just trying to make your brand pop in another language, we’ve got you. Go ahead, check us out today!

FAQs

What exactly is quality assessment in machine translation?

It’s basically checking if a machine’s translation actually makes sense, reads naturally, and doesn’t sound like a robot wrote it.

Do machines do a good job on their own?

Sometimes, but they’ll miss vibes, jokes, and context. Humans still gotta back them up.

What’s better: BLEU, METEOR, or TER?

All of them are cool for scoring, but none replace a human’s eyes on the text.

Can bad translations hurt my brand?

Totally. One awkward line and suddenly your brand looks clueless. Yikes.

How do pros make machine translations work?

Hybrid magic: let AI do the heavy lifting, then humans tweak, fix, and make it actually readable.